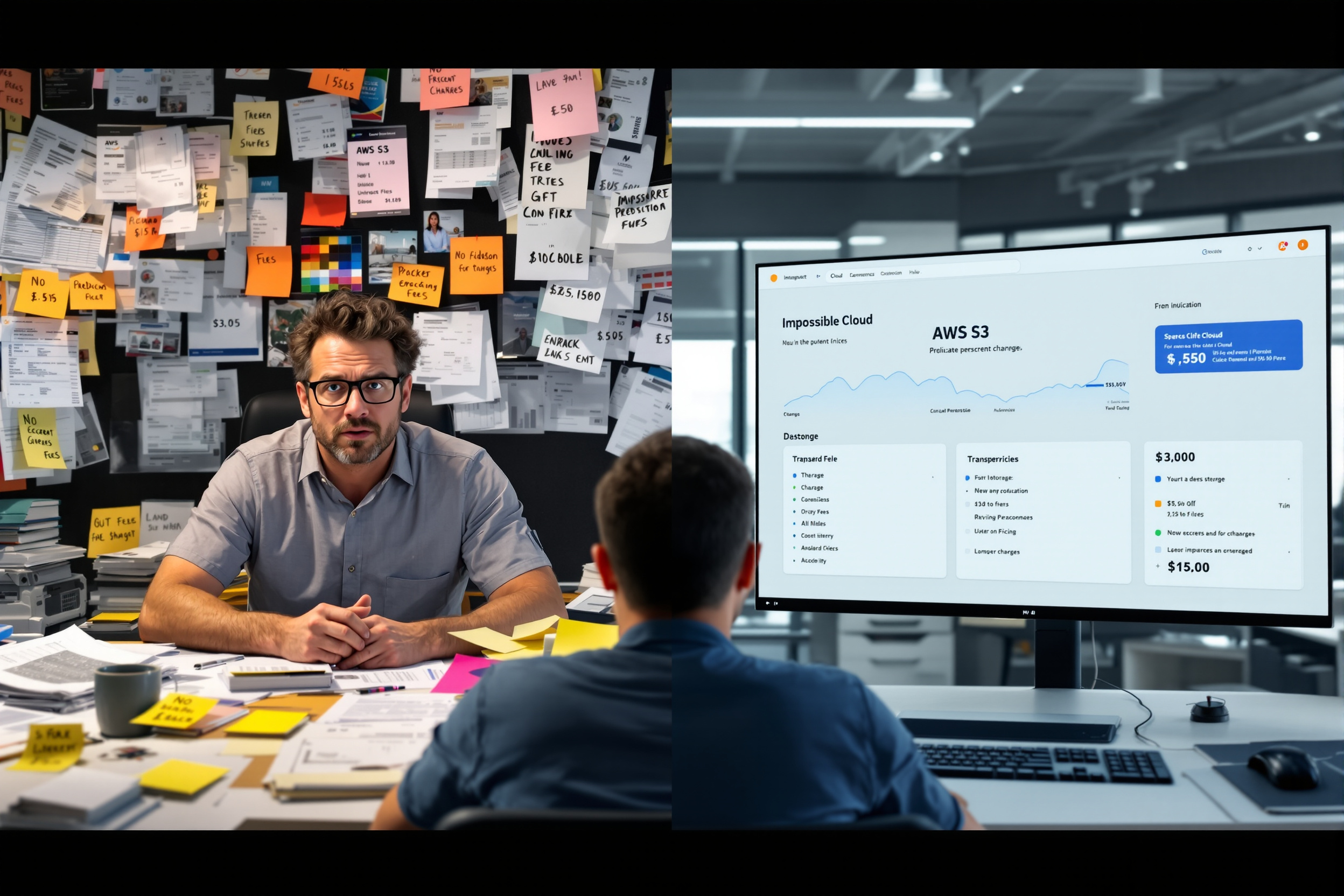

Enterprise IT leaders evaluating S3-compatible solutions often focus on raw speed metrics like latency and IOPS. However, a comprehensive Linode object storage performance benchmark reveals that these numbers tell only half the story. The most significant performance drains are often financial, stemming from unpredictable egress fees that can inflate cloud bills by 20-40%. This article introduces a modern framework for assessing object storage, prioritizing cost predictability, data control, and architectural simplicity as key performance indicators for backup, disaster recovery, and ransomware protection workloads.

Key Takeaways

- A genuine object storage performance benchmark must evaluate Total Cost of Ownership (TCO), not just raw throughput, factoring in hidden egress and API call fees, which can inflate cloud bills by 20-40%.

- Predictable performance is achieved through an 'Always-Hot' storage model, which eliminates restore delays and delivers up to 20% faster backup speeds compared to tiered systems.

- Full S3-API compatibility is essential for a seamless migration, ensuring that existing backup tools and scripts operate without modification, thereby protecting prior IT investments.

Redefining Performance: From Throughput to Total Cost of Ownership

Standard performance benchmarks measure throughput and latency, yet they miss the largest cost drivers. Organizations report that hidden egress fees and API charges can inflate total cloud storage costs by 3-5x for data-intensive workloads. A forward-thinking performance analysis must therefore include economic metrics to calculate the true Total Cost of Ownership (TCO). Without this, businesses risk underestimating their actual cloud spend by up to 40%.

The most effective storage solutions are cost-efficient by design, eliminating these variable expenses entirely. By choosing a provider with a transparent model featuring zero egress fees, no API call charges, and no minimum storage durations, organizations achieve predictable budgeting. This approach typically reduces cloud storage expenses by 60-80% for backup and recovery workflows. True performance is measured in financial stability, not just gigabits per second.
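To make the comparison concrete, the following is a minimal sketch of a 12-month TCO calculation that sets a metered pricing model against a flat one. Every price and workload figure is a hypothetical placeholder chosen for illustration, not a quote from any provider, and the `Pricing` and `annual_tco` names are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-unit prices in USD. All values used below are hypothetical."""
    storage_per_tb_month: float  # stored capacity
    egress_per_tb: float         # data transferred out
    per_1k_requests: float       # API calls, billed per 1,000


def annual_tco(pricing: Pricing, stored_tb: float,
               egress_tb_per_month: float, requests_per_month: int,
               months: int = 12) -> float:
    """Sum storage, egress, and API request charges over the period."""
    storage = pricing.storage_per_tb_month * stored_tb
    egress = pricing.egress_per_tb * egress_tb_per_month
    api = pricing.per_1k_requests * requests_per_month / 1000
    return months * (storage + egress + api)


# Hypothetical price sheets, not taken from any real provider.
metered = Pricing(storage_per_tb_month=20.0, egress_per_tb=80.0,
                  per_1k_requests=0.005)
flat = Pricing(storage_per_tb_month=20.0, egress_per_tb=0.0,
               per_1k_requests=0.0)

# Hypothetical workload: 100 TB stored, 10 TB read back each month.
workload = dict(stored_tb=100, egress_tb_per_month=10,
                requests_per_month=5_000_000)

metered_cost = annual_tco(metered, **workload)
flat_cost = annual_tco(flat, **workload)
overhead = (metered_cost - flat_cost) / flat_cost

print(f"metered: ${metered_cost:,.2f}")
print(f"flat:    ${flat_cost:,.2f}")
print(f"fees add {overhead:.1%} on top of storage")
```

Replacing the placeholder figures with a provider's published price sheet and your own measured egress and request volumes turns this into a rough first-pass estimate. It deliberately ignores minimum storage durations and tier retrieval fees, which would need their own terms.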

This economic clarity forms the foundation for a more strategic approach to data management.

The Architectural Advantage: How 'Always-Hot' Storage Delivers Consistency

Performance consistency is critical for backup and disaster recovery, where unpredictable delays can cripple operations. Many cloud storage platforms rely on complex tiering, which introduces restore delays and retrieval fees that act as a performance penalty. An 'Always-Hot' object storage model ensures all data is immediately accessible, eliminating these operational surprises and improving backup performance by up to 20%.

This architecture provides strong read/write consistency and predictable latencies essential for mixed workloads. For MSPs and enterprises, this means third-party backup tools remain stable and recovery time objectives (RTOs) are met without fail. An effective object storage price-performance ratio is one where you do not pay extra to access your own data swiftly.

Here is how an always-hot model simplifies operations:

- Eliminates complex lifecycle policies that can lead to data access issues.

- Prevents API timeouts during urgent, large-scale data restores.

- Ensures 100% of data is available for analytics or compliance checks instantly.

- Reduces operational overhead by removing the need to manage data tiers.

This architectural choice directly impacts your ability to respond to a ransomware attack. With an always-hot model, you can initiate a full recovery in minutes, not hours, a critical factor in business continuity.

Beyond immediate access, seamless integration with existing tools is the next performance hurdle.

S3 Compatibility as a Performance Metric

True S3 compatibility is a critical, yet often overlooked, performance indicator. A drop-in S3 replacement protects years of investment in scripts, applications, and staff training, reducing migration risk to near zero. The ability to switch providers by simply changing an endpoint accelerates time-to-value by 100% compared to solutions requiring code rewrites. This seamless integration should be a core criterion in any S3-compatible storage speed comparison.
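As an illustration of what "changing an endpoint" can look like in practice, here is a small sketch that builds S3 client settings for two providers. The endpoint URLs and the `client_settings` helper are hypothetical. The dictionary keys mirror the keyword arguments accepted by boto3's `client()` function, but the sketch itself uses only the standard library and does not contact any service.

```python
from urllib.parse import urlparse

# Hypothetical endpoints; substitute the values your providers document.
ENDPOINTS = {
    "current": "https://s3.current-provider.example",
    "candidate": "https://s3.candidate-provider.example",
}


def client_settings(provider: str, access_key: str, secret_key: str) -> dict:
    """Build keyword arguments for an S3 client; only the endpoint varies."""
    endpoint = ENDPOINTS[provider]
    if urlparse(endpoint).scheme != "https":
        raise ValueError(f"endpoint for {provider!r} must use HTTPS")
    return {
        "endpoint_url": endpoint,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }


old = client_settings("current", "KEY", "SECRET")
new = client_settings("candidate", "KEY", "SECRET")

# Which settings differ between the two providers?
changed = {name for name in old if old[name] != new[name]}
print(changed)
```

In a real migration the credentials would differ as well. The point of the sketch is that the application code calling the client stays the same, provided the target actually implements the S3 operations your tools rely on.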

A truly compatible alternative supports not just basic object operations but also advanced capabilities like versioning, lifecycle management, and Object Lock. This ensures that critical backup and ransomware protection workflows function without modification. Vendor lock-in, often enforced by proprietary APIs and punitive egress fees, is cited as a top concern by 75% of IT leaders. An S3-compatible API combined with zero egress fees provides a built-in exit strategy, preserving your negotiating power and long-term freedom.

This compatibility is the key to unlocking value for channel partners and MSPs.

Enabling MSPs with Predictable Performance and Margins

For Managed Service Providers (MSPs), performance is synonymous with profitability. Unpredictable costs directly erode margins on Backup-as-a-Service (BaaS) and Disaster-Recovery-as-a-Service (DRaaS) offerings. A storage backend with zero egress or API fees is predictable by design, allowing MSPs to quote with confidence and protect their margins, which can increase by over 50%.

This model shifts the narrative from reselling a vendor's product to owning a branded cloud service. A whitelabel-ready platform with a multi-tenant console, robust IAM controls, and API-driven automation is the foundation for a high-margin BaaS business. Predictable costs are a competitive advantage, enabling MSPs to pass savings to clients or increase profitability.

A successful partner program should include these key elements:

- A multi-tenant partner console with granular role-based access control (RBAC).

- Full automation capabilities via API and CLI for seamless integration.

- Custom branding options (domain and UI) to build brand ownership.

- Transparent reporting tools for monitoring usage and margins.

- Zero egress fees to ensure quotes remain profitable month after month.

With the right foundation, MSPs can build services that meet stringent enterprise requirements.

Meeting Enterprise Security and Compliance Demands

Performance in regulated industries extends to security and compliance. Enterprise-ready object storage must provide verifiable security features without compromising speed. This includes multi-layer encryption (in-transit and at-rest) and Immutable Storage with Object Lock, which is now a non-negotiable defense against ransomware for 90% of organizations. These features must be delivered from certified data centers, such as those with SOC 2 and ISO 27001 attestations.

Data control is another critical performance metric, especially for US-based companies. The ability to use country-level geofencing ensures data remains within a predefined region, satisfying compliance needs for sectors like finance and healthcare. A modern low-latency S3 storage solution must also offer robust identity and access management (IAM) with support for external IdPs via SAML/OIDC. Because these controls rest on open standards rather than proprietary mechanisms, they help keep an exit path available and guard against vendor lock-in.

Ultimately, a comprehensive benchmark combines these technical and financial metrics into a practical evaluation.

How to Conduct a Real-World Object Storage Benchmark

A modern benchmark moves beyond isolated speed tests to a holistic assessment of TCO and operational readiness. It simulates real-world conditions, including cost modeling for data retrieval and API usage under load. A thorough evaluation can prevent budget overruns of 30% or more. This practical approach ensures you select a solution that performs well both technically and financially.

Use this checklist to guide your next evaluation:

- Model TCO, Not Just Storage Cost: Calculate projected costs for 12 months, including storing, accessing, and retrieving 25% of your total data volume to simulate a recovery event.

- Test S3 API Completeness: Validate that your primary backup and archiving tools work without modification, specifically testing features like Object Lock and versioning.

- Measure Restore Performance: Time a large-scale restore of both small and large files to verify that there are no performance penalties or delays from tiered storage systems.

- Verify Security Controls: Confirm that you can implement granular IAM policies and that the platform holds current compliance certifications like SOC 2.

- Assess the Exit Strategy: Confirm in writing that there are zero egress fees for bulk data retrieval and that the S3-API ensures data portability.
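The "Measure Restore Performance" item above can be sketched as a small timing harness. The `time_restore` function and the in-memory `fake_fetch` stand-in are hypothetical. In a real test, `fetch` would wrap whatever download call your backup tool or S3 client provides, and the key lists would come from a listing of the bucket under test.

```python
import time
from typing import Callable, Iterable


def time_restore(fetch: Callable[[str], bytes], keys: Iterable[str]) -> dict:
    """Fetch every key once and report elapsed time and throughput."""
    total_bytes = 0
    count = 0
    start = time.perf_counter()
    for key in keys:
        total_bytes += len(fetch(key))
        count += 1
    elapsed = time.perf_counter() - start
    mib = total_bytes / 2**20
    return {
        "objects": count,
        "bytes": total_bytes,
        "seconds": elapsed,
        "mib_per_s": mib / elapsed if elapsed > 0 else float("inf"),
    }


# Stand-in for a real download call: returns fake payloads from memory.
fake_store = {
    "small/0001.txt": b"x" * 4_096,
    "large/backup.img": b"x" * 8_388_608,
}


def fake_fetch(key: str) -> bytes:
    return fake_store[key]


# Time small and large objects separately, as the checklist suggests.
small = time_restore(fake_fetch, [k for k in fake_store if k.startswith("small/")])
large = time_restore(fake_fetch, [k for k in fake_store if k.startswith("large/")])

print(small["objects"], small["bytes"])
print(large["objects"], large["bytes"])
```

Reporting small and large objects separately matters because small-object restores tend to be bound by per-request latency while large-object restores are bound by bandwidth. Running the same harness against data of different ages is one way to check for delays introduced by tiering.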

The goal is to identify a solution that offers a predictable cost model and an exit strategy from day one. A successful benchmark leads to a partnership that enhances data control and financial stability.

Ready to see how a TCO-focused approach can transform your storage strategy? Talk to an expert to calculate your savings.
